In March 2025, the security operations center (SOC) of Pacific Rim Bank discovered that an attacker had silently exfiltrated credentials for its SWIFT transfer gateway. Forensic analysis traced the root cause to a single, highly personalised email camouflaged as a board-level memo. The sender domain passed SPF and DKIM. The language echoed genuine corporate tone. Traditional gateways let it slide, and USD 12 million vanished in four hours.

This incident is a brutal reminder that spear-phishing detection sits at the heart of modern email threat intel. Conventional perimeter tools may block shotgun spam, yet they crumble when deception is laser-focused. Defenders need an approach that learns, adapts, and preserves evidence. That approach is supervised machine learning.

What Makes Spear-Phishing So Dangerous

Attackers research their victims for weeks, sometimes months. Public LinkedIn profiles, discarded conference badges, even archived press releases become bricks in their pretext. Humans are wired to trust messages that reference insider details such as meeting topics, project codes, and nicknames. Social engineering leverages that bias.

- Trust exploitation – forged reply chains hijack genuine threads, neutralising suspicion.

- Targeted deception – sending to fewer than ten recipients keeps volume below SIEM anomaly thresholds.

- Psychological pressure – urgency (“board needs figures in 30 minutes”) forces snap decisions.

For teams charged with phishing attack prevention and day-to-day email cybersecurity, this cocktail of psychology and stealth demands a new playbook. Dive deeper into manipulation tactics in our Social Engineering – Complete Roadmap. Our guide to Building a 24/7 Tier-1 SOC in Malaysia also shows how smart rota design limits fatigue, another factor attackers exploit.

Why Traditional Filters Fail

Legacy anti-spam engines rely on:

- Signature matching – MD5 hashes, URL blocklists, known bad IPs.

- Heuristic rules – trigger phrases (“verify account”), malformed HTML, excessive punctuation.

- Reputation scores – sender domain age, bulk-mail patterns.

These crumble against hand-crafted attacks. The malicious link resolves to a freshly minted domain. The body is polished corporate prose. Signatures never match, because every payload is unique.

Deterministic rules also lack context—they can’t correlate a sudden writing-style shift in an ongoing thread with historical mailbox behaviour. That blind spot fuels the pivot toward adaptive phishing email classification driven by true email threat intelligence.
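The writing-style shift that rules miss can be approximated in a few lines. This is a minimal sketch, not a production stylometry model: it assumes the sender's earlier messages are available as plain text and scores a new message by its cosine distance from the centroid of their character n-gram TF-IDF vectors. The function name `style_shift` and the 3 to 5 character n-gram range are illustrative choices.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity


def style_shift(history: list[str], new_message: str) -> float:
    """Cosine distance between new_message and the sender's style centroid.

    0.0 means an identical character n-gram profile; 1.0 means no overlap.
    """
    # Character n-grams (padded at word boundaries) capture greetings,
    # sign-offs and punctuation habits, not just topic words.
    vec = TfidfVectorizer(analyzer="char_wb", ngram_range=(3, 5))
    hist_matrix = vec.fit_transform(history)
    centroid = np.asarray(hist_matrix.mean(axis=0))   # shape (1, n_features)
    new_vec = vec.transform([new_message]).toarray()  # shape (1, n_features)
    return float(1.0 - cosine_similarity(new_vec, centroid)[0, 0])


# Invented example history for one sender.
history = [
    "Hi team, please find the Q1 report attached. Cheers, Anna",
    "Hi team, attached is the weekly update. Cheers, Anna",
]
print(style_shift(history, "Hi team, the monthly report is attached. Cheers, Anna"))
print(style_shift(history, "WIRE FUNDS NOW OR BOARD ESCALATES"))
```

The in-style message scores well below the out-of-character one; where to set the alert threshold would need calibrating per mailbox.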

Supervised Machine Learning for Spear-Phishing Detection

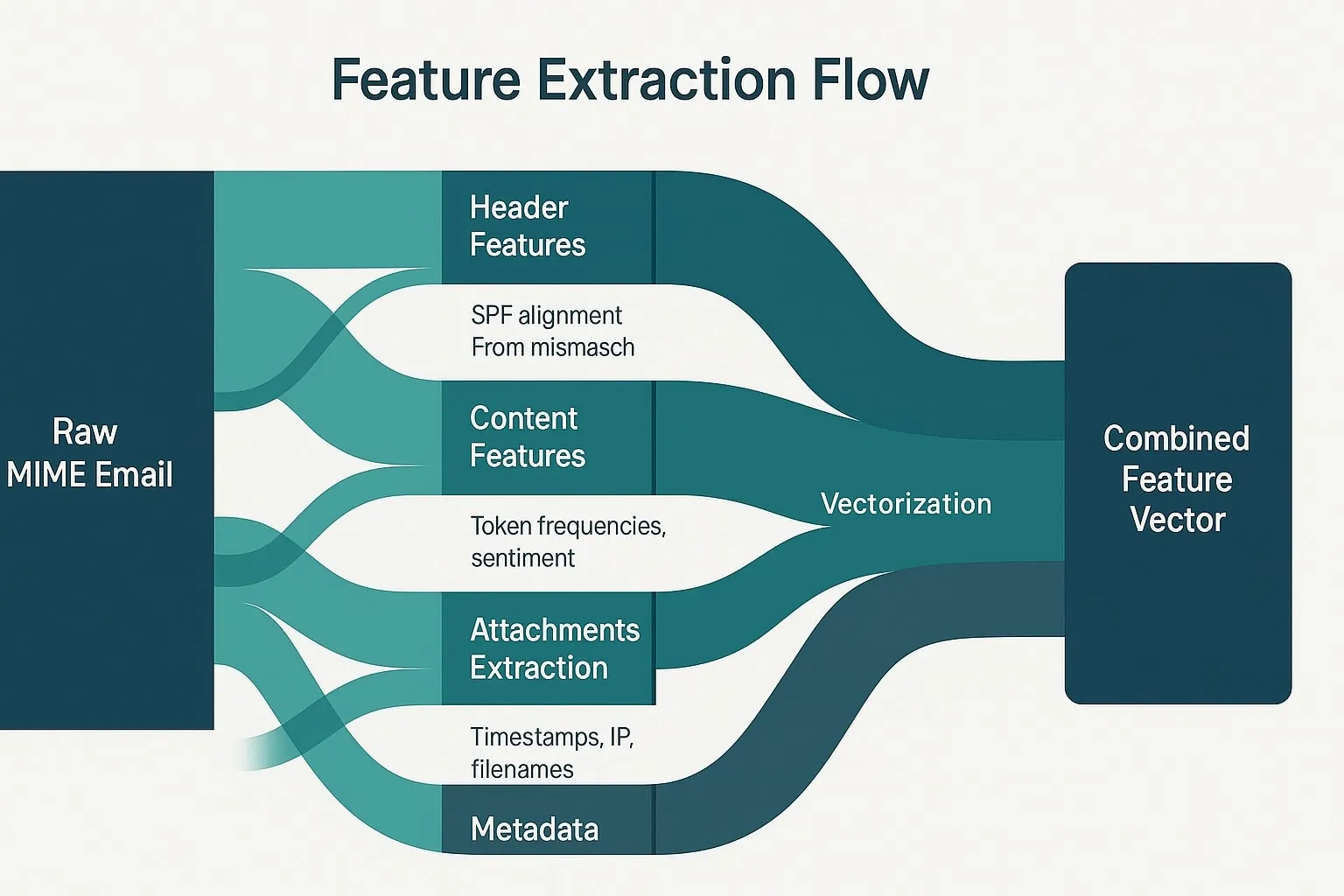

Supervised learning trains on pairs of feature vectors and labelled outcomes. Feed an algorithm thousands of emails tagged benign or malicious and it infers the decision boundary. Applied to spear-phishing detection, the workflow is:

- Ingest raw EML/MIME objects.

- Extract discriminative attributes—header anomalies, lexical cues, sender-recipient graph metrics.

- Vectorise via TF-IDF, one-hot encoders, or embeddings.

- Train Random Forest, SVM, or Gradient Boosting models.

- Validate with k-fold cross-validation and adversarial test sets.

- Deploy the best model to inline filters or SIEM enrichment.
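The validation step can be sketched with a scikit-learn `Pipeline`, so the TF-IDF vocabulary is refitted inside each fold and terms from held-out mail never leak into training. The ten-message corpus below is invented purely to make the snippet runnable; an adversarial test set would be scored the same way on a separate hold-out.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline

# Invented toy corpus: 1 = spear-phish, 0 = benign.
texts = [
    "urgent wire transfer needed before board meeting",
    "per your urgent request please verify credentials now",
    "ceo needs gift cards immediately keep this confidential",
    "reset your password via this secure link today",
    "kindly approve the attached invoice urgently",
    "lunch menu for friday is attached",
    "minutes from the weekly project sync",
    "reminder quarterly town hall next tuesday",
    "updated holiday schedule for the office",
    "draft slides for the client workshop",
]
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]

# The vectoriser lives inside the pipeline, so each fold fits its own vocabulary.
pipe = make_pipeline(
    TfidfVectorizer(ngram_range=(1, 2)),
    RandomForestClassifier(n_estimators=200, class_weight="balanced", random_state=0),
)
# Stratification keeps the phish/benign ratio the same in every fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(pipe, texts, labels, cv=cv, scoring="f1")
print(f"F1 per fold: {scores.round(2)}, mean {scores.mean():.2f}")
```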

Why supervise? Ground truth. In corporate environments we know which emails triggered incidents. Leveraging that clarity accelerates convergence and yields evidence auditors can understand.

| Algorithm | Strengths | Weaknesses |
|---|---|---|
| Random Forest | Handles mixed data, built-in feature importance | Larger memory footprint |
| Linear SVM | High margin, interpretable weights | Needs C (regularisation) tuning |
| XGBoost | Often top accuracy, fast inference | Opaque without SHAP |

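```python
import email
import re

from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_extraction.text import TfidfVectorizer


def preprocess(raw_email: str) -> str:
    """Return the lower-cased subject and plain-text body as one token string."""
    msg = email.message_from_string(raw_email)
    subject = msg['Subject'] or ''
    body = ''
    if msg.is_multipart():
        # Concatenate every text/plain part; other MIME parts are ignored here.
        for part in msg.walk():
            if part.get_content_type() == 'text/plain':
                payload = part.get_payload(decode=True)  # None if the part is empty
                if payload:
                    body += payload.decode(errors='ignore')
    else:
        payload = msg.get_payload(decode=True)
        if payload:
            body = payload.decode(errors='ignore')
    # Keep alphabetic runs only; everything else collapses to a single space.
    return re.sub(r'[^A-Za-z]+', ' ', subject + ' ' + body).lower()


# `emails` (raw RFC 822 strings) and `labels` (0 = benign, 1 = spear-phish)
# are assumed to be loaded from your labelled corpus beforehand.
vectorizer = TfidfVectorizer(ngram_range=(1, 2), max_features=20_000)
X = vectorizer.fit_transform([preprocess(e) for e in emails])

rf = RandomForestClassifier(n_estimators=800, n_jobs=-1, class_weight='balanced')
rf.fit(X, labels)
```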

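Wrapped in an SMTP sidecar, this pipeline brings spear-phishing machine learning directly into the mail flow. Per-message latency depends heavily on model size (800 trees is not free), so benchmark it against your SLO before scoring inline.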
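Whether a model meets an inline latency target is best measured rather than assumed. A minimal way to check, assuming single-message scoring on the local host, is to time `predict_proba` per message and read off the 95th percentile. The random feature matrix and the 100-tree forest are placeholders for your own vectoriser and model.

```python
import time

import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)
# Placeholder data: 2,000 "messages" x 50 engineered features, random labels.
X = rng.random((2000, 50))
y = rng.integers(0, 2, size=2000)
model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

latencies_ms = []
for row in X[:200]:
    start = time.perf_counter()
    model.predict_proba(row.reshape(1, -1))  # score one message at a time
    latencies_ms.append((time.perf_counter() - start) * 1000)

p95 = float(np.percentile(latencies_ms, 95))
print(f"p95 single-message latency: {p95:.2f} ms")
```

Inference cost grows roughly linearly with the number of trees, so `n_estimators` is the first lever to pull if p95 misses the budget.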

Advantages

- Precision – ensemble models routinely exceed 98 % F1 on balanced corpora.

- Adaptability – weekly retraining digests new lure styles.

- Evidence – feature vectors persist for airtight digital forensics.

Feature Engineering and Public Datasets in Cybersecurity

Raw text is noisy. Crafting feature-engineered datasets turns chaos into signal.

| Category | Example Feature | Rationale |
|---|---|---|
| Header Integrity | From ≠ Return-Path, SPF fail | Spoofing indicator |
| Auth Alignment | DKIM match score | BEC often breaks DKIM alignment |
| Semantics | Imperative verb density, urgency tokens | Social manipulation |
| HTML Structure | Hidden iframes, `<form>` tags | Credential harvesters |
| Tone Shift | Sentiment delta vs. sender baseline | Anomalous aggression |
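Several of the header and HTML features above can be computed with the standard library alone. This sketch assumes the receiving MTA has already stamped an `Authentication-Results` header; the feature names are hypothetical, the From/Return-Path check only fires when both domains are present, and the regex-based HTML checks are crude stand-ins for a real HTML parser. It also flags a missing `X-Mailer` header, a common toolkit artefact.

```python
import email
import re
from email.utils import parseaddr


def _domain(header_value) -> str:
    """Lower-cased domain of an address header ('' if absent)."""
    _, addr = parseaddr(header_value or "")
    return addr.rpartition("@")[2].lower()


def header_features(raw_email: str) -> dict:
    msg = email.message_from_string(raw_email)
    auth = (msg.get("Authentication-Results") or "").lower()
    from_dom, rp_dom = _domain(msg.get("From")), _domain(msg.get("Return-Path"))
    html = ""
    for part in msg.walk():
        if part.get_content_type() == "text/html":
            payload = part.get_payload(decode=True) or b""
            html += payload.decode(errors="ignore").lower()
    return {
        # Header integrity: envelope sender domain differs from the From domain.
        "from_returnpath_mismatch": int(bool(from_dom and rp_dom) and from_dom != rp_dom),
        "spf_fail": int("spf=fail" in auth or "spf=softfail" in auth),
        "dkim_pass": int("dkim=pass" in auth),
        "missing_x_mailer": int(msg.get("X-Mailer") is None),
        # HTML structure: credential harvesters often embed forms or hidden frames.
        "form_tags": len(re.findall(r"<form\b", html)),
        "hidden_iframes": len(re.findall(r"<iframe[^>]*(?:display:\s*none|hidden)", html)),
    }
```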

Public Corpora

- Enron Email Dataset – 500 K genuine corporate mails.

- Nazario Phishing Corpus – labelled spear and bulk phish.

- PhishTank – live phishing URLs for enrichment.

Store the raw MIME, feature vector, and label in a tamper-evident SQLite DB—vital for future digital forensics in cybersecurity.
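SQLite is not tamper-evident on its own. One common way to add that property, sketched here rather than prescribed, is a hash chain: each row stores a SHA-256 over its content plus the previous row's hash, so editing any earlier row breaks verification. The table name `evidence` and both functions are hypothetical.

```python
import hashlib
import json
import sqlite3

SCHEMA = """CREATE TABLE IF NOT EXISTS evidence (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    raw_mime TEXT NOT NULL,
    features TEXT NOT NULL,   -- JSON-encoded feature vector
    label INTEGER NOT NULL,
    prev_hash TEXT NOT NULL,
    row_hash TEXT NOT NULL
)"""
GENESIS = "0" * 64  # previous-hash value for the first row


def _row_hash(prev_hash: str, raw_mime: str, features: str, label: int) -> str:
    payload = json.dumps([prev_hash, raw_mime, features, label])
    return hashlib.sha256(payload.encode()).hexdigest()


def append_evidence(conn: sqlite3.Connection, raw_mime: str, features: list, label: int) -> str:
    last = conn.execute("SELECT row_hash FROM evidence ORDER BY id DESC LIMIT 1").fetchone()
    prev_hash = last[0] if last else GENESIS
    feats = json.dumps(features)
    row_hash = _row_hash(prev_hash, raw_mime, feats, label)
    conn.execute(
        "INSERT INTO evidence (raw_mime, features, label, prev_hash, row_hash) "
        "VALUES (?, ?, ?, ?, ?)",
        (raw_mime, feats, label, prev_hash, row_hash),
    )
    conn.commit()
    return row_hash


def verify_chain(conn: sqlite3.Connection) -> bool:
    """Recompute every hash in insertion order; False if any row was altered."""
    prev_hash = GENESIS
    rows = conn.execute(
        "SELECT raw_mime, features, label, prev_hash, row_hash FROM evidence ORDER BY id"
    )
    for raw_mime, feats, label, stored_prev, stored_hash in rows:
        if stored_prev != prev_hash or _row_hash(prev_hash, raw_mime, feats, label) != stored_hash:
            return False
        prev_hash = stored_hash
    return True
```

A chain only proves integrity relative to its newest hash, and anyone with write access could rebuild it, so that latest hash should also be exported somewhere the database's writers cannot alter.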

From Lab to Production: Implementation Checklist

Deploying spear-phishing detection via machine learning is a DevSecOps journey:

- Data Pipeline Hardening – mirror SMTP via Postfix `always_bcc`; hash PII pre-store.

- Model Lifecycle Governance – 30-day retrain cadence with MLflow; p95 latency SLO < 2 ms.

- Security Controls – signed Git commits; read-only container root filesystems for incident-ready digital forensics.

- Human-in-the-Loop Feedback – weekly false-positive review injects new labels, tightening phishing attack prevention.
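"Hash PII pre-store" is safer with a keyed hash, because a plain SHA-256 of an email address can be reversed by hashing a list of known addresses. The sketch below uses HMAC-SHA256 with a secret key assumed to live in a secrets manager; here it is read from a hypothetical `PII_HMAC_KEY` environment variable. The same address always maps to the same token, so sender-recipient features still work on pseudonymised data.

```python
import hashlib
import hmac
import os
from email.utils import getaddresses


def pseudonymise(address: str, key: bytes) -> str:
    """Deterministic, keyed token for an address (case- and whitespace-insensitive)."""
    return hmac.new(key, address.strip().lower().encode(), hashlib.sha256).hexdigest()[:32]


def pseudonymise_header(value: str, key: bytes) -> str:
    """Replace every address in a To/Cc-style header with its token."""
    return ", ".join(pseudonymise(addr, key) for _, addr in getaddresses([value]) if addr)


# Hypothetical variable name; the fallback is for local development only.
KEY = os.environ.get("PII_HMAC_KEY", "dev-only-key").encode()
print(pseudonymise_header("Alice <alice@bank.example>, bob@bank.example", KEY))
```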

For container isolation tips, revisit our Home Web Server on Raspberry Pi 5.

Evaluating Model Effectiveness: Beyond Accuracy

Spear-phishing prevalence is ≈ 0.03 % of corporate mail. A naïve “always benign” model scores 99.97 % accuracy—useless. Use:

- F1-Score – balances precision/recall.

- MCC – robust under imbalance.

- Campaign-level AUROC – cluster by payload hash to gauge wave detection.
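The accuracy trap is easy to reproduce. Assuming 3 spear-phish in 10,000 messages (the ≈ 0.03 % prevalence above) and a model that never flags anything, accuracy looks excellent while F1 and MCC collapse to zero. The second half sketches campaign-level AUROC on an invented set of payload-hash clusters, taking each cluster's highest message score as its campaign score (one reasonable aggregation, not the only one).

```python
import numpy as np
from sklearn.metrics import accuracy_score, f1_score, matthews_corrcoef, roc_auc_score

y_true = np.zeros(10_000, dtype=int)
y_true[:3] = 1                    # 3 spear-phish in 10,000 = 0.03 %
y_naive = np.zeros_like(y_true)   # the "always benign" model

print(accuracy_score(y_true, y_naive))              # 0.9997
print(f1_score(y_true, y_naive, zero_division=0))   # 0.0
print(matthews_corrcoef(y_true, y_naive))           # 0.0

# Invented example: payload hash -> (per-message scores, campaign label).
campaigns = {
    "a1f3": ([0.91, 0.40, 0.35], 1),
    "b7c2": ([0.55], 1),
    "c9d0": ([0.10, 0.62], 0),
    "d4e8": ([0.05], 0),
}
camp_scores = [max(scores) for scores, _ in campaigns.values()]
camp_labels = [label for _, label in campaigns.values()]
print(roc_auc_score(camp_labels, camp_scores))      # 0.75
```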

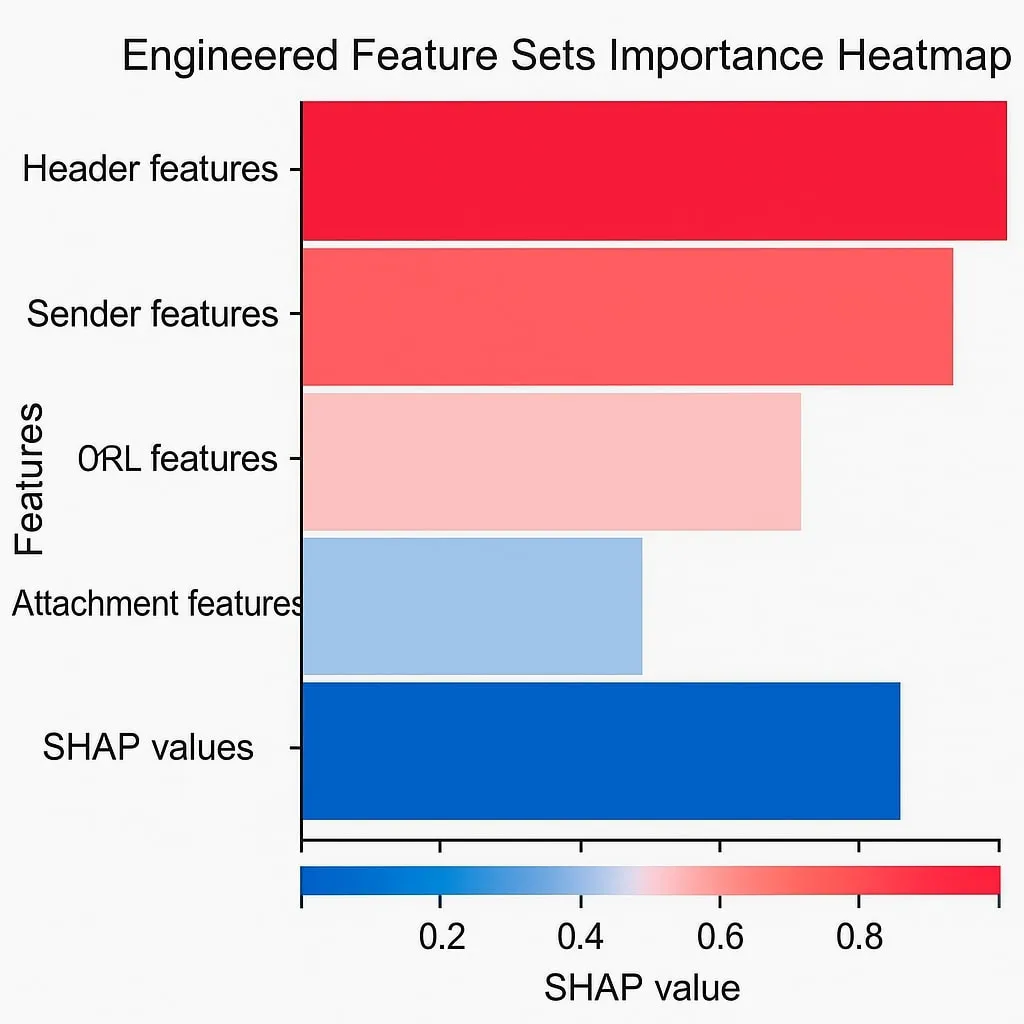

At Pacific Rim Bank, Random Forest hit 0.96 F1, versus 0.71 for the rule-based gateway. SHAP charts pinpointed tokens like “per your urgent request”, evidence later used in court.

Free Frameworks and Tools Worth Your Time

| Purpose | Tool | Notes |
|---|---|---|
| Feature Extraction | Apache Tika | Parses 1,400+ file types |
| Vectorisation | Scikit-learn TF-IDF | Battle-tested, Pandas-friendly |
| Model Training | XGBoost | Handles sparse matrices at speed |
| MLOps | MLflow | Version-controls machine learning pipelines |
| Intel Feeds | PhishTank API | Adds real-time email threat intel |

Deploy these with our Portainer stack tutorial for zero-downtime updates.

Use Cases in SOCs, Investigations, and Real-Time Detection

SOCs integrate the classifier behind their MTA. Scores ≥ 0.80 trigger quarantine plus a SOAR playbook:

- Open JIRA ticket.

- Retro-hunt 30 days of mail.

- Push indicators to TIP.
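The routing rule itself is small enough to sketch directly. The 0.80 threshold comes from the text; the action names are hypothetical placeholders for whatever your SOAR platform exposes, since real JIRA, mail-search, and TIP integrations differ per vendor.

```python
from dataclasses import dataclass, field

QUARANTINE_THRESHOLD = 0.80  # scores at or above this are held


@dataclass
class Verdict:
    message_id: str
    score: float
    actions: list[str] = field(default_factory=list)


def route(message_id: str, score: float) -> Verdict:
    """Map a classifier score to MTA and SOAR actions (action names are hypothetical)."""
    verdict = Verdict(message_id, score)
    if score >= QUARANTINE_THRESHOLD:
        verdict.actions = [
            "quarantine",        # hold the message at the MTA
            "open_jira_ticket",  # playbook step 1
            "retro_hunt_30d",    # playbook step 2: search the last 30 days of mail
            "push_iocs_to_tip",  # playbook step 3
        ]
    else:
        verdict.actions = ["deliver"]
    return verdict


print(route("<q1-metrics@example>", 0.87).actions)
```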

Analysts trust the system because the top-N contributing features show exactly why a message was blocked, which is crucial for compliance. For a career roadmap that leverages such tooling, read our Ultimate Guide to SOC & SIEM Careers 2025. If you’re weighing SIEM versus SOAR integrations, see SIEM vs. SOAR: Which One Do You Need?

Mapping to MITRE ATT&CK and Compliance

The technique aligns with MITRE ATT&CK T1566.002 – Spearphishing Link. Document this mapping in your runbooks to satisfy ISO 27001 A.5.10 and MAS TRM 9.1.4—cementing phishing attack prevention inside recognised frameworks.

Advanced Research Directions

- Graph Neural Networks – treat email threads as graphs; node embeddings capture relational trust.

- Contrastive Learning – pre-train on unlabeled corpora, fine-tune for spear-phishing detection.

- Domain-Adaptive Transfer – cross-train between verticals (finance ↔ healthcare) using federated learning on engineered feature sets.

- Edge Inference – Rust-based scoring inside an MTA sidecar trims latency, vital for proactive email cybersecurity.

Progress demands a fusion of data science, threat intel, and compliance—the very ethos of spear phishing machine learning.

Case Study: Pacific Rim Bank Incident Timeline

| Time (UTC+8) | Event | Detection Layer |
|---|---|---|
| 09:02 AM | Phish email titled “Q1 Governance Metrics” lands | MTA |
| 09:04 AM | Inline ML scores 0.87 and quarantines the message | Inline ML (spear-phishing detection) |
| 09:05 AM | SOAR playbook fires, enriches with email threat intel | SOAR |
| 09:12 AM | Analyst sandboxes mail; URL leads to credential harvester | Sandbox |
| 09:18 AM | Indicators retro-hunted; no prior hits | SIEM |
| 10:44 AM | Second attempt blocked; evidence logged for digital forensics | Web Proxy |

Key takeaways:

- Missing X-Mailer header (a common toolkit artefact) spiked the model’s score.

- Filename deviation (.docx vs historical .xlsx) surfaced via engineered features.

- Post-incident retraining lifted recall by another 0.7 %.
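The filename-deviation feature can be sketched as a set comparison between a message's attachment extensions and those the sender has used before. Passing the sender's history as a plain list of past filenames is an assumption made for brevity; in practice it would come from the evidence store or mail logs, and both function names are hypothetical.

```python
import email
from pathlib import PurePosixPath


def attachment_extensions(raw_email: str) -> set[str]:
    """Lower-cased extensions of every named MIME part in the message."""
    msg = email.message_from_string(raw_email)
    exts = set()
    for part in msg.walk():
        name = part.get_filename()  # None for parts without a filename
        if name:
            exts.add(PurePosixPath(name).suffix.lower())
    return exts


def novel_extension_ratio(raw_email: str, past_filenames: list[str]) -> float:
    """Share of this message's attachment types never seen from the sender (0.0 if none)."""
    current = attachment_extensions(raw_email)
    seen = {PurePosixPath(n).suffix.lower() for n in past_filenames}
    return len(current - seen) / len(current) if current else 0.0
```

A `.docx` from a sender who has only ever sent `.xlsx` scores 1.0, the kind of deviation the case study describes.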

In 2023 a similar lure sailed through legacy filters for 36 hours. The ROI on proactive machine learning is therefore not hypothetical—it’s ledger-visible.

Conclusion and Forward-Looking Insights

Machine-learned spear-phishing detection is no longer experimental; it’s operational reality. Marrying supervised machine learning with engineered feature sets elevates phishing email classification far beyond static heuristics, arming defenders with adaptable, evidence-rich tools.

Next frontiers:

- LLM-assisted enrichment – large language models summarise intent, boosting email threat intel workflows.

- Federated learning – privacy-preserving cross-enterprise training.

- Explainable AI – SHAP graphs embedded in quarantine dashboards.

- Edge scoring – instant verdicts before the email hits the inbox.

Attackers thrive on defender inertia; data-driven defenders flip that asymmetry.